Map Tasks in Flyte

MapReduce is a prominent terminology in the Big Data vocabulary owing to the ease of handling large datasets with the “map” and “reduce” operations. A conventional map-reduce job consists of two phases: one, it performs filtering and sorting of the data chunks, and the other, it collects and processes the outputs generated.

Consider a scenario wherein you want to perform hyperparameter optimization. In such a case, you want to train your model on multiple combinations of hyperparameters to finalize the combination that outperforms the others. Put simply, this job has to parallelize the executions of the hyperparameter combinations (map) and later filter the best accuracy that could be generated (reduce).

A map task in Flyte intends to implement the task mentioned above. It is primarily more inclined towards the “map” operation.

Referring to Dynamic Workflows in Flyte blog before proceeding with this piece can help deepen your understanding of the differences between a dynamic workflow and map task; however, it isn’t mandatory.

Introduction

A map task handles multiple inputs at runtime. It runs through a single collection of inputs and is useful when several inputs must run through the same code logic.

Unlike a dynamic workflow, a map task runs a task over a list of inputs without creating additional nodes in the DAG, providing valuable performance gains, meaning a map task lets you run your tasks spanning multiple inputs within a single node.

When to Use Map Tasks?

- When there’s hyperparameter optimization in place (multiple sets of hyperparameters to a single task)

- When multiple batches of data need to be processed at a time

- When a simple map-reduce pipeline needs to be built

- When there is a large number of runs that use identical code logic, but different data

Map Task vs. Dynamic Workflow

A map task’s logic can be achieved using a <span class=“code-inline”>for</span> loop in a dynamic workflow, however, that would be inefficient if there are lots of mapped jobs involved.

A map task internally uses a compression algorithm (bitsets) to handle every Flyte workflow node’s metadata, which would have otherwise been in the order of 100s of bytes.

We encourage you to use a map task when you want to handle large data parallel workloads!

Usage Example

A map task in Flyte runs across a list of inputs, which ought to be specified using the Python <span class=“code-inline”>list</span> class. The function you want to call your map task on has to be decorated with the <span class=“code-inline”>@task</span> decorator, and itself has to conform to a signature with one input and, at most, one output.

At run time, the map task will run for every value. If TaskMetadata or Resources is specified in the map task using overrides, it is applied to every input instance or mapped job.

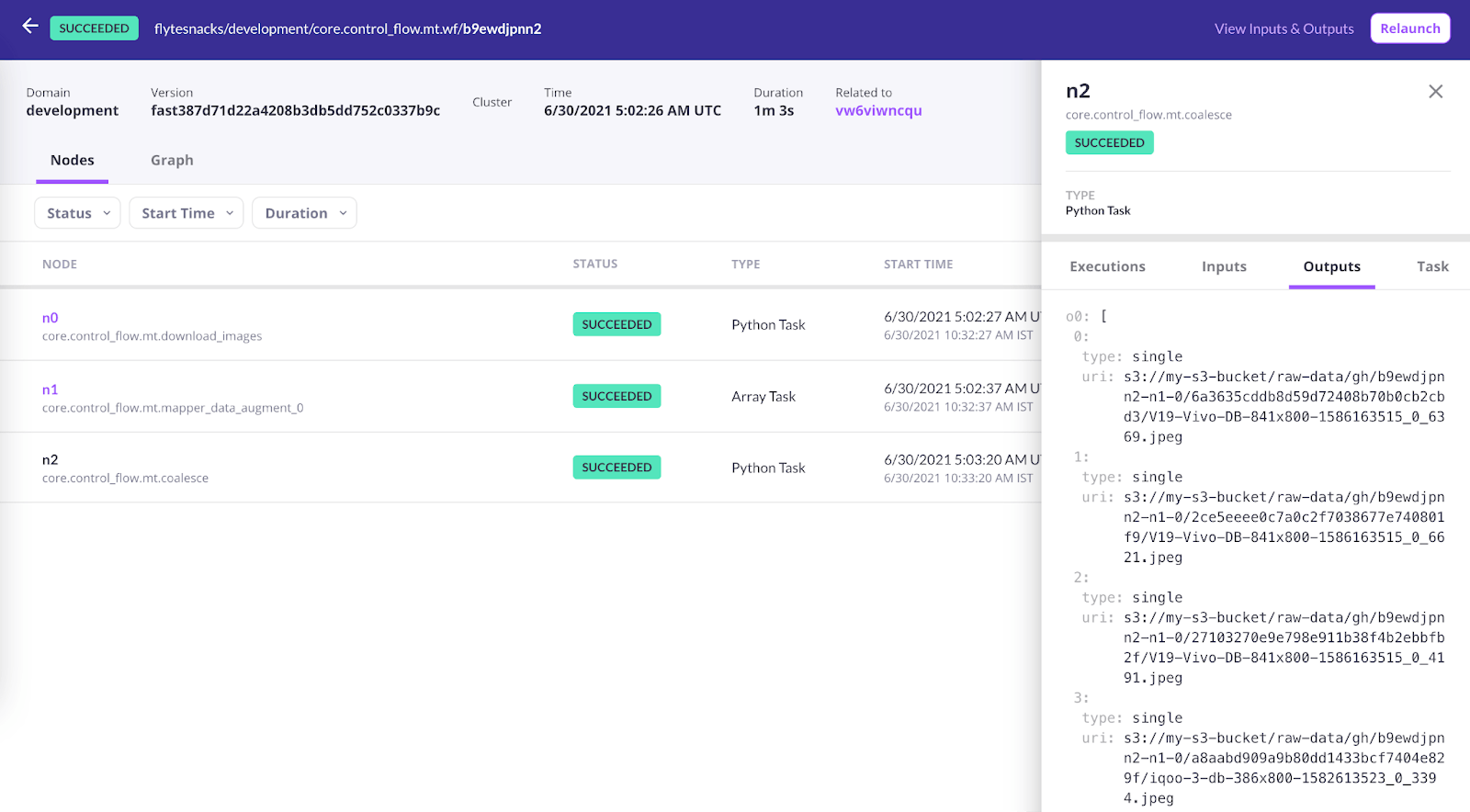

Here’s an example that “spins up data augmentation of images in parallel using a map task”.

Let’s first import the libraries.

Next, we define a <span class=“code-inline”>task</span> that returns the URLs of five random images based on the given query from Google images.

<span class=“code-inline”>FlyteFile</span> automatically downloads the remote images to the local drive.

We define a <span class=“code-inline”>task</span> that generates new images given an image. Memoization (caching) is enabled for every mapped task. The task, in the end, stores the augmented images in a common directory, which we segregate and return as a list of <span class=“code-inline”>FlyteFiles</span>.

We define a <span class=“code-inline”>coalesce</span> task to convert the list of lists to a single list.

Finally, we define a workflow that spins up the <span class=“code-inline”>data_augment</span> task using the <span class=“code-inline”>map_task</span>. A map task here helps in triggering the jobs for the list of images in hand within one node. In resource-constrained cases (when the total number of resources is less than the aggregate required for concurrent execution), Flyte will automatically queue up the execution and schedule as and when resources are available.

You can also define a map-specific attribute called <span class=“code-inline”>min_success_ratio</span>. It determines the minimum fraction of the total jobs which can complete successfully before terminating the task and marking it as successful.

On running this, the output will be a list of files that consist of the augmented images.

Here’s an animation depicting the data flow through Flyte’s entities for the above code:

List of future improvements (coming soon!):

- Concurrency control

- Auto batching: Intelligently batch larger workloads onto the same pods

Reference: Keras Blog

Cover Image Credits: Photo by Parrish Freeman on Unsplash